
I recently participated in an AI for Business Transformation event where speakers showcased the practical application of ChatGPT. The demonstration illustrated how ChatGPT can expedite day-to-day tasks like creating travel itineraries, drafting email responses, and composing LinkedIn posts. Despite its early stage, the demo sparked curiosity regarding the potential applications of ChatGPT in a business setting.

As discussed in our previous blog, Generative AI has the capacity to revolutionize business operations across various domains. However, businesses face significant challenges in developing and successfully implementing Generative AI projects. In this blog post, we will delve into the primary challenges and outline simple yet effective steps that businesses can take to establish a pipeline of Generative AI projects within their customer service function.

While deep learning and generative machine learning (ML) are getting a lot more attention from businesses, the vast majority of ML and AI projects have traditionally failed to live up to expectations. According to McKinsey’s Global Survey, only about 15% of respondents had successfully scaled AI across multiple parts of their business, and only 36% said that ML algorithms had been deployed beyond the pilot stage. Gartner’s research likewise showed only 53% of AI projects making it from prototype to production. Two major reasons for the high failure rate of AI projects are failing to establish clear business objectives and selecting projects with poor data quality.

Businesses often find themselves enthusiastic about a specific AI solution, attempting to integrate it into their operations without a well-defined business problem and clear objectives. Success is more likely when businesses reverse the approach, starting from the problem and working toward the solution. Choosing the right problem to address matters more than choosing a solution for an ambiguously defined problem statement. Moreover, a well-defined business problem should be backed by a robust dataset.

In the realm of Large Language Models (LLMs), data ingestion plays a pivotal role in addressing specific business problems. It involves collecting substantial amounts of structured and unstructured data (via methods such as web scraping), preprocessing it through steps like indexing, labeling, and tokenization, and readying it for LLM training and prompt engineering.
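To make the ingestion steps more concrete, here is a minimal Python sketch of the preprocessing stage described above. It is only an illustration: the `Record` structure, the function names, the labels, and the sample texts are all hypothetical, and the word-level tokenizer is a stand-in for the subword tokenizers that real LLMs use.

```python
import re
from dataclasses import dataclass


@dataclass
class Record:
    doc_id: int        # position of the document in the raw collection (the "index")
    label: str         # hypothetical category label, e.g. "billing"
    tokens: list[str]  # naive word-level tokens


def clean(text: str) -> str:
    """Strip HTML-like tags (common in scraped pages) and collapse whitespace."""
    no_tags = re.sub(r"<[^>]+>", " ", text)
    return re.sub(r"\s+", " ", no_tags).strip()


def tokenize(text: str) -> list[str]:
    """Very rough word tokenizer; an assumption made for illustration only."""
    return re.findall(r"[a-z0-9']+", text.lower())


def ingest(raw_docs: list[tuple[str, str]]) -> list[Record]:
    """Turn (label, raw_text) pairs into indexed, labeled, tokenized records."""
    records = []
    for doc_id, (label, raw) in enumerate(raw_docs):
        tokens = tokenize(clean(raw))
        if tokens:  # skip documents that are empty after cleaning
            records.append(Record(doc_id, label, tokens))
    return records


# Made-up sample data standing in for scraped or exported customer messages.
raw = [
    ("billing", "<p>My invoice   is wrong.</p>"),
    ("empty", "<br>"),
    ("shipping", "Where is my order?"),
]
records = ingest(raw)
for r in records:
    print(r.doc_id, r.label, r.tokens)
```

In practice each of these steps would be handled by dedicated tooling, but even a toy version shows why data quality matters: documents that are empty or noisy after cleaning contribute nothing to the model.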

Before we explore steps to develop a pipeline of GenAI projects, let us first understand the role of GenAI in customer service.

Role of GenAI in Customer Service

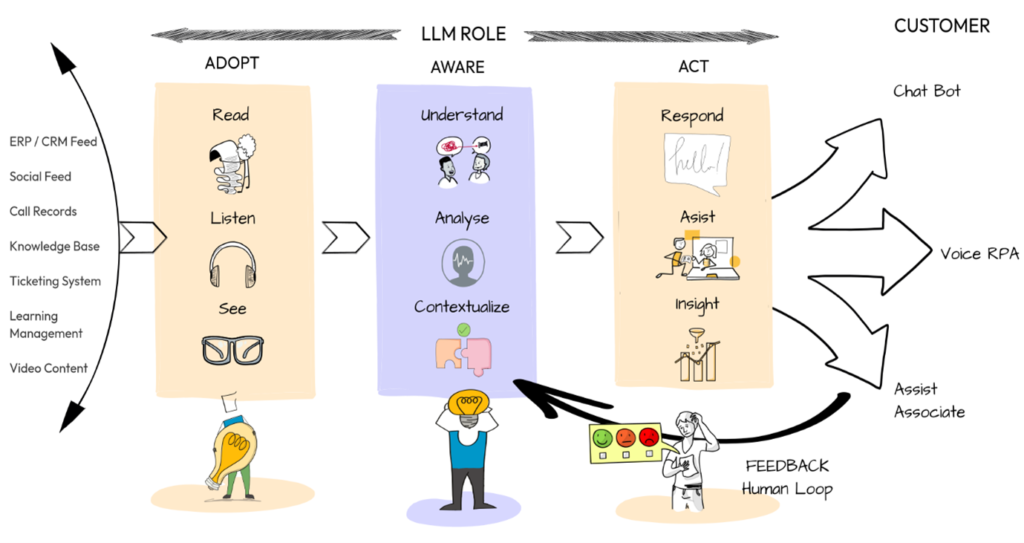

Generative AI has the potential to change the anatomy of the workflow across the customer service function, thereby augmenting customer experience and the productivity of customer service agents by automating some of their activities. With the advent of LLMs, the customer service workflow could change as shown in the picture above.

Three capabilities of LLMs are in play in the workflow example above.

- ADOPT: LLMs can not only read data from a variety of sources such as ERP, CRM, and knowledge bases, but also listen to audio such as call recordings and see graphic or video content. Like humans, they can read, listen, and see, and then process the information before acting. Within the customer service function, this ability can both supplement human agents and drastically improve the speed and accuracy of responses to customers.

- AWARE: As mentioned in the previous blog about the key characteristics of GenAI, LLMs are remarkably good at understanding customer queries and their intent, and can analyse a vast repository of knowledge or data to retrieve the contextual information relevant to a query. This makes them an extremely important tool for the customer service function, with huge potential to transform customer experience.

- ACT: LLMs can be orchestrated to act in multiple ways: they can respond to customers directly through chatbots or voice RPA (an RPA-powered voice bot that answers customers’ questions), assist customer service agents to make them more productive, or provide relevant insights such as personalized product recommendations to customers.

LLMs can also be configured to capture human feedback, allowing them to learn from previous interactions and improve their responses over time. As you can see above, LLMs thrive on large datasets and have capabilities that cut across the lifecycle of a customer interaction. Now, let’s explore how to go about creating a pipeline of GenAI projects in customer service.

Five-step Approach

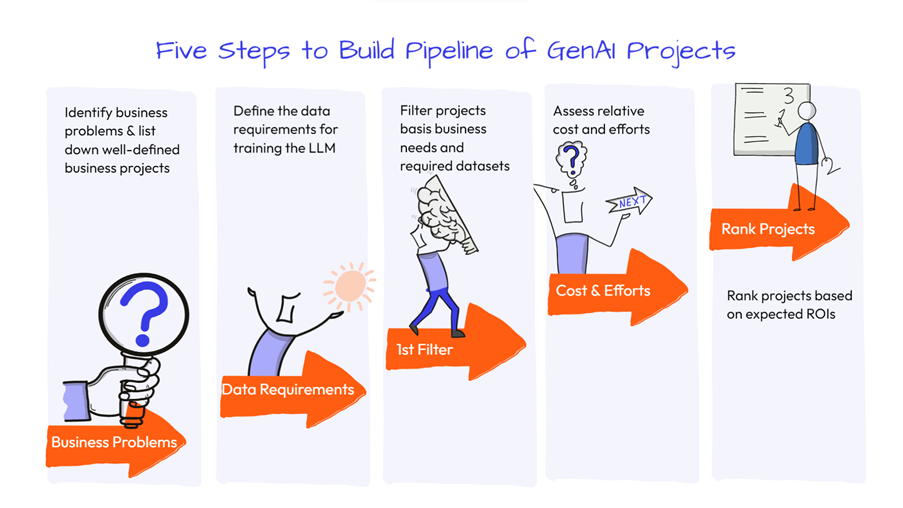

The five-step approach that we prescribe is mostly common sense. It is based on our interactions with multiple stakeholders across businesses and the numerous questions they have when approaching this subject.

- Business Problems: As highlighted in the previous blog, GenAI has the potential to address many business problems: answering regular customer queries, analysing customer sentiment, helping agents quickly retrieve the relevant information to serve your customers better, helping your quality analysts score agents’ interactions quickly and accurately, assisting your employees with HR policies and procedures, answering prospective employees’ queries about the company, culture, and interviews, answering help desk queries, and many more. Three key questions to ask yourself while putting down the list of business problem statements are:

- Is the existing human effort in a given business problem too high?

- Will the validation of GenAI output accuracy be easy?

- Does the business problem have high usage frequency?

GenAI will produce the best results in problem areas where the existing human effort (without GenAI) is high while validating the accuracy of GenAI output is easy. In other words, a task that takes effort to execute but is easy to validate is likely a good problem to solve. Areas with high usage frequency are also preferred, as there will be more example data to fine-tune and improve the LLM, and subsequently a more substantial impact.

Many customer service leaders also ask whether they should look only at their own problem statements or also at solving their clients’ problems, since the latter may cannibalise their revenues as a service provider. Our simple response is that you had better do it, or else someone else will.
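The three screening questions for business problems (human effort, ease of validation, and usage frequency) can be turned into a simple shortlist. The sketch below is one possible way to do it, not a prescribed method: the 1-to-5 scales, the threshold of 4, and the example scores are assumptions made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Problem:
    name: str
    human_effort: int        # 1 (low) to 5 (high), hypothetical scale
    ease_of_validation: int  # 1 (hard to check) to 5 (easy to check)
    usage_frequency: int     # 1 (rare) to 5 (very frequent)


def screen(problems, threshold=4):
    """Keep problems with high effort AND easy validation, most frequent first."""
    keep = [
        p for p in problems
        if p.human_effort >= threshold and p.ease_of_validation >= threshold
    ]
    return sorted(keep, key=lambda p: p.usage_frequency, reverse=True)


# Illustrative scores only; a cross-functional team would assign real ones.
candidates = [
    Problem("Scoring agent interactions (QA)", 5, 4, 4),
    Problem("HR policy questions", 2, 5, 3),
    Problem("Answering regular customer queries", 4, 4, 5),
]

shortlist = screen(candidates)
for p in shortlist:
    print(p.name, p.usage_frequency)
```

With these made-up scores, the HR policy use case drops out because the existing human effort is low, and the remaining two are ordered by how often they occur.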

- Data Requirements: Once you have created a long list of business problems that can be addressed using GenAI, the next most critical task is to determine the data requirements for training the LLM for each of those business needs. Customer service workflows generate structured user data. However, a lot of this customer data sits behind clients’ firewalls and is not easily available. For example, if you are looking to automate quality assurance and score 100% of agents’ interactions using GenAI, you would need your clients’ permission to access customer interactions with agents, which may take months to obtain. So it’s always good to ask the following two questions to determine the data needs relevant to the task the LLM is expected to be trained for:

- Is clean, reliable, and timely data available for the given business need?

- Do you have access to that data, or can you gain access to data in a reasonable time frame?

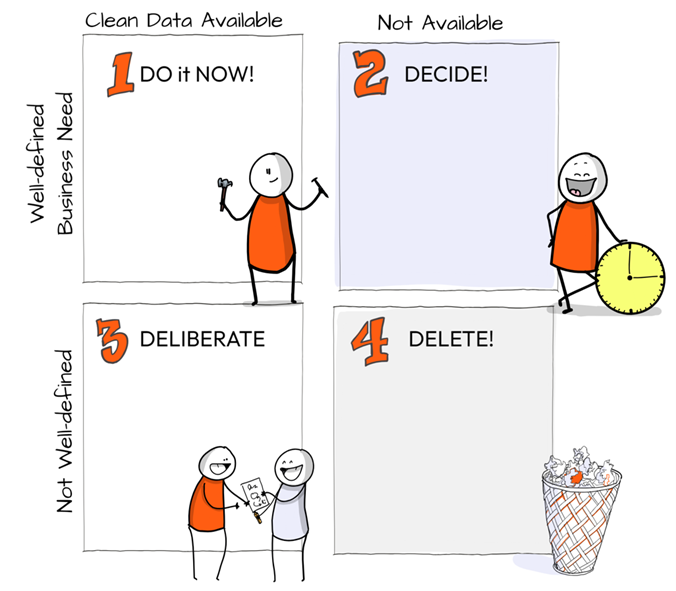

- 1st Filter: Once you have created your list of business problems and determined data requirements, it’s time to apply the first filter to prioritize projects with a well-defined business problem and available clean datasets. This can be done using the simple filtration matrix shown in the picture above.

- Cost & Effort: Not all projects that fall under the 1st matrix in the above picture are winners. You still need to assess the relative cost and effort involved in implementing these projects using LLMs to identify the real potential winners. Unlike some traditional AI or automation projects, the success of GenAI projects depends a lot more on the amount of data required to train the LLM. The larger the dataset, the better, but it also results in higher fine-tuning and tokenization costs, which, if not kept in check, can balloon much like cloud costs. In such cases, one of the key considerations is the reusability of AI and data components. For example, if the LLM is being trained to perform customer sentiment analysis by collecting a large number of customer reviews, comments, and social media posts, is it possible to reuse this across multiple projects rather than just one?

- Rank projects: The last step in the process is to rank your GenAI projects using not only the cost and effort involved but also the potential ROI. It is important to understand which ROI measures will help identify winning projects. These include a reduction in queries handled by live agents, increased CSAT scores and reduced customer effort, faster response times by agents, increased agent productivity, improved employee experience, increased productivity of support staff such as the hiring team, faster response times for helpdesk queries, and more.
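The last three steps (first filter, cost and effort, ranking) can be sketched as a small scoring script. This is a rough illustration under stated assumptions rather than a definitive method: the `Project` fields, the token price, the simple benefit-to-cost ratio used as ROI, and every number in the example are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical fine-tuning price per million tokens; not a vendor quote.
PRICE_PER_MILLION_TOKENS = 8.0


@dataclass
class Project:
    name: str
    problem_well_defined: bool
    data_available: bool
    training_tokens: int        # estimated tokens needed for fine-tuning
    implementation_cost: float  # estimated effort, in the same currency
    annual_benefit: float       # estimated yearly benefit


def first_filter(projects):
    """Keep projects with a well-defined problem and accessible, clean data."""
    return [p for p in projects if p.problem_well_defined and p.data_available]


def token_cost(p, reuse_factor=1):
    """Fine-tuning cost, shared across projects that reuse the same data/model."""
    return p.training_tokens / 1_000_000 * PRICE_PER_MILLION_TOKENS / reuse_factor


def roi(p, reuse_factor=1):
    """Simple benefit-to-cost ratio, used here as a stand-in for ROI."""
    return p.annual_benefit / (token_cost(p, reuse_factor) + p.implementation_cost)


def rank(projects):
    """Apply the first filter, then order the survivors by ROI, best first."""
    return sorted(first_filter(projects), key=roi, reverse=True)


# Made-up figures for illustration only.
projects = [
    Project("Customer sentiment analysis", True, True, 4_000_000, 10_000, 30_000),
    Project("Automated QA scoring", True, False, 6_000_000, 8_000, 50_000),
    Project("Agent-assist retrieval", True, True, 2_000_000, 5_000, 20_000),
]

ranked = rank(projects)
for p in ranked:
    print(f"{p.name}: ROI {roi(p):.2f}")
```

In this made-up example, automated QA scoring is filtered out despite its high estimated benefit because the client data is not yet accessible. The `reuse_factor` parameter reflects the reusability point above: spreading the same fine-tuning cost over two projects halves the token cost attributed to each.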

You will need a cross-functional team throughout the process to ensure that well-informed decisions are made in qualifying and selecting potential GenAI projects. This team should include people who understand the customer service function, the data flow across the value chain, and ML capabilities.

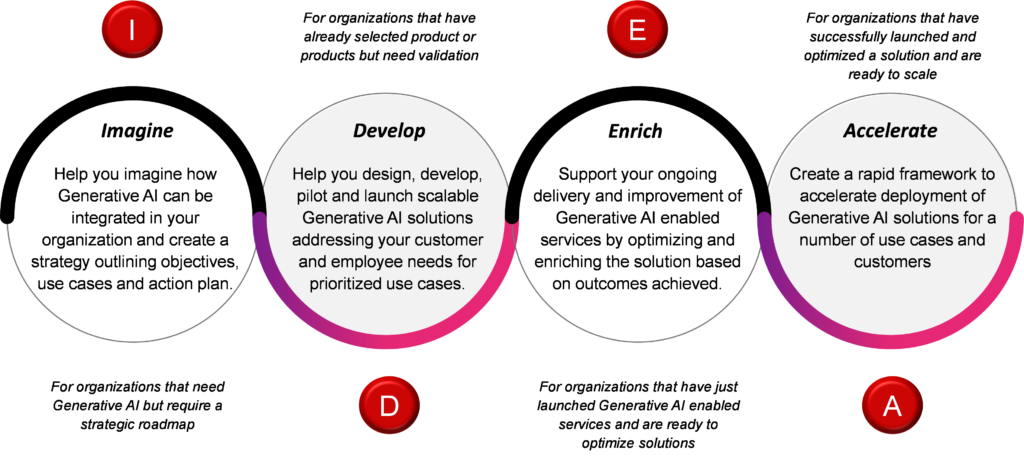

At Ingroup Consulting, we are passionate about helping businesses transform their customer experience by leveraging GenAI capabilities. We have developed our proprietary framework called IDEA – Imagine, Develop, Enrich and Accelerate – to help organizations in their journey to explore and adopt Generative AI. If you are interested in learning more about how you can use Generative AI to transform the experience for your customers, we would love to talk. Write to us at info@ingroupconsult.com for more information.

Stay tuned for our next blog on “Building LLM-based Chatbot for Addressing Customer Queries from a Knowledge Base”.

Images created using Drawify.